Docker Engine

Docker Engine is a powerful tool that simplifies the process of creating, deploying, and managing applications using containers. Here's an introduction to Docker Engine and its basic functionalities:

What is Docker Engine?

Docker Engine is the core component of Docker, a platform that enables developers to package applications and their dependencies into lightweight containers. These containers can then be deployed consistently across different environments, whether it's a developer's laptop, a testing server, or a production system.

Basic Functionalities of Docker Engine

- Containerization: Docker Engine allows you to create and manage containers, which are isolated environments that package an application and its dependencies. Containers ensure consistency in runtime environments across different platforms.

- Image Management: Docker uses images as templates to create containers. Docker Engine allows you to build, push, and pull images from Docker registries (like Docker Hub or private registries). Images are typically defined using a Dockerfile, which specifies the environment and setup instructions for the application.

- Container Lifecycle Management: Docker Engine provides commands to start, stop, restart, and remove containers. It also manages the lifecycle of containers, including monitoring their status and resource usage.

- Networking: Docker Engine facilitates networking between containers and between containers and the outside world. It provides mechanisms for containers to communicate with each other and with external networks, as well as configuring networking options like ports and IP addresses.

- Storage Management: Docker Engine manages storage volumes that persist data generated by containers. It supports various storage drivers and allows you to attach volumes to containers, enabling data persistence and sharing data between containers and the host system.

- Resource Isolation and Utilization: Docker Engine uses Linux kernel features (such as namespaces and control groups) to provide lightweight isolation and resource utilization for containers. This ensures that containers run efficiently without interfering with each other or with the host system.

- Integration with Orchestration Tools: Docker Engine can be integrated with orchestration tools like Docker Swarm and Kubernetes for managing containerized applications at scale. Orchestration tools automate container deployment, scaling, and load balancing across multiple hosts.
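As a minimal sketch of the lifecycle, networking, and storage points above, the function below starts a container with a published port and a named volume, inspects it, then stops and removes it. The container name `demo_web`, the volume name `demo_data`, the port numbers, and the `nginx:alpine` image are placeholders chosen for illustration; nothing else in this guide depends on them.

```shell
# Hypothetical helper: names, ports, and image are illustrative assumptions.
lifecycle_demo() {
    name="${1:-demo_web}"        # container name (assumed)
    image="${2:-nginx:alpine}"   # any image with a long-running process

    # Start detached, publish container port 80 on host port 8080,
    # and mount a named volume so its data outlives the container.
    docker run -d --name "$name" -p 8080:80 -v demo_data:/data "$image" || return 1

    docker ps --filter "name=$name"     # confirm the container is running
    docker stats --no-stream "$name"    # one-shot CPU and memory snapshot

    docker stop "$name"                 # graceful stop
    docker rm "$name"                   # remove the stopped container
}
```

Calling `lifecycle_demo` with no arguments uses the defaults. The named volume is left in place afterwards, which is the point of using one.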

Key Benefits of Docker Engine

- Consistency: Docker ensures consistency between development, testing, and production environments by encapsulating applications and dependencies into containers.

- Efficiency: Containers are lightweight and share the host system's kernel, reducing overhead and improving performance compared to traditional virtual machines.

- Portability: Docker containers can run on any platform that supports Docker, making it easy to move applications between different environments.

- Isolation: Containers provide a level of isolation that enhances security and stability, as each container operates independently of others on the same host.

Basic Steps to build Docker images

- Create a Dockerfile that provides the instructions for building the Docker image.

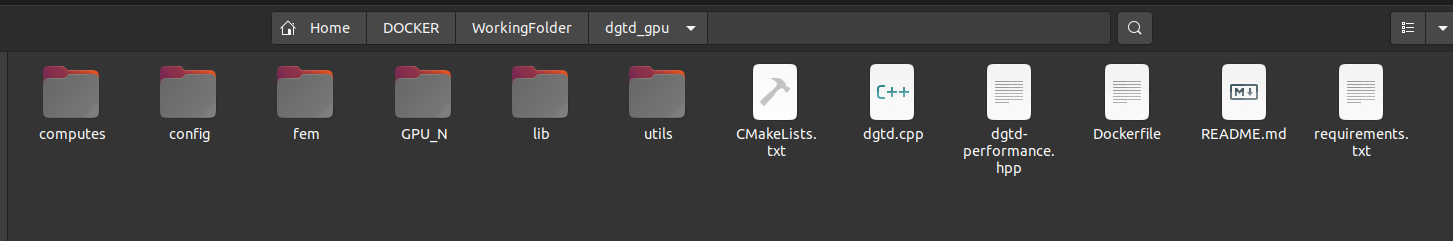

We can examine the contents of the Dockerfile.

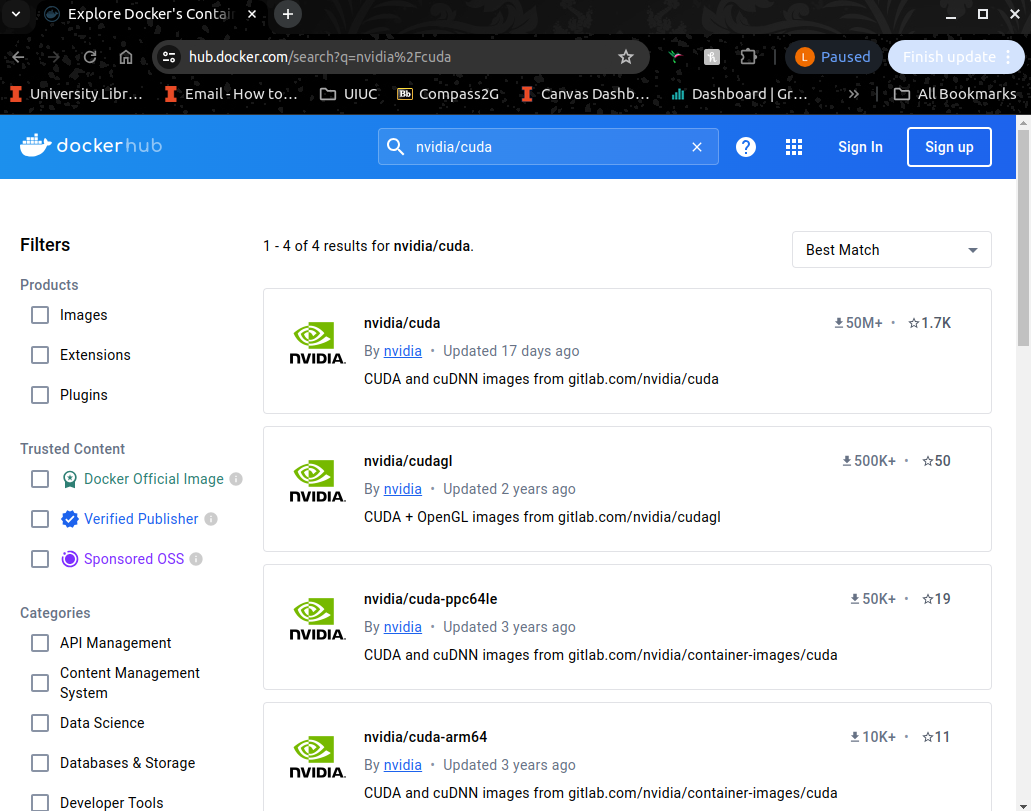

We build our image on top of an existing image that provides an Ubuntu 22.04 environment with the CUDA development toolkit, so the first step pulls that base image from Docker Hub. Suitable base images can be found by searching Docker Hub (https://hub.docker.com/search?q=nvidia%2Fcuda).

# -----------------------------------------------------------------------------------------

# Step 1 : Import a base image from online repository

# FROM nvidia/cuda:12.5.0-devel-ubuntu22.04

FROM nvidia/cuda:12.4.0-devel-ubuntu22.04
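Before writing the rest of the Dockerfile, it can help to confirm that the base image is reachable and contains the CUDA compiler. The sketch below assumes the same tag as the FROM line; the function name `check_base_image` is made up for this example.

```shell
# Tag copied from the FROM line above; adjust it if you change the base image.
BASE_IMAGE="nvidia/cuda:12.4.0-devel-ubuntu22.04"

check_base_image() {
    image="${1:-$BASE_IMAGE}"
    # Download the image from Docker Hub (no-op if already present and current).
    docker pull "$image" || return 1
    # The devel variant is expected to ship nvcc; printing its version
    # does not require a GPU on the host.
    docker run --rm "$image" nvcc --version
}
```

Run `check_base_image` once. If the second command prints a compiler version, the base image should be suitable for building CUDA code.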

In addition, setting ENV DEBIAN_FRONTEND=noninteractive in a Dockerfile is a directive that adjusts the environment variable DEBIAN_FRONTEND within the Docker container during the image build process.

- Non-interactive Environment

Debian-based Linux distributions, including many Docker base images, use DEBIAN_FRONTEND to determine how certain package management tools (like apt-get) interact with users. Setting DEBIAN_FRONTEND=noninteractive tells these tools to run in a non-interactive mode. In this mode, the tools assume default behavior for prompts that would normally require user input, such as during package installation or configuration.

- Avoiding User Prompts

During Docker image builds, it's crucial to automate as much as possible to ensure consistency and reproducibility. Without setting DEBIAN_FRONTEND=noninteractive, package installations might prompt for user input (e.g., to confirm installation, choose configuration options). This interaction halts the build process unless explicitly handled in advance.

- Common Usage in Dockerfiles

In Dockerfiles, especially those designed for automated builds (CI/CD pipelines, batch processes), it's typical to include ENV DEBIAN_FRONTEND=noninteractive early on. This ensures that subsequent commands relying on package management tools proceed without waiting for user input.
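The same variable can be tried by hand inside a running Debian or Ubuntu container. The following is only a sketch: the function name `install_noninteractive` is hypothetical, and it assumes you are root inside the container.

```shell
# Sketch: install packages without configuration prompts.
# Intended to run as root inside a Debian/Ubuntu container.
install_noninteractive() {
    apt-get update || return 1
    # The variable is set for this one command only. The -y flag answers
    # apt's own yes/no confirmation, which DEBIAN_FRONTEND does not cover.
    DEBIAN_FRONTEND=noninteractive apt-get install -y "$@"
}
```

For example, `install_noninteractive tzdata` installs a package that would otherwise typically ask for a geographic area during configuration.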

# -----------------------------------------------------------------------------------------

# Step 2 : Suppress Interactive Prompts from Debian

ENV DEBIAN_FRONTEND=noninteractive

Next, we need to install the packages and libraries and set the environment variables required to compile or run Maxwell-TD.

This Dockerfile snippet outlines steps for setting up a Docker image with various libraries and tools typically required for scientific computing and development environments. Let's break down each part:

- Update system and install libraries

  - Purpose: Updates the package list and installs the essential libraries and tools required for compiling and building the application.

  - Packages installed:

    - build-essential, g++, gcc: Compiler tools and libraries.

    - cmake, gfortran: Build system generator and Fortran compiler.

    - Various development libraries (libopenblas-dev, liblapack-dev, libfftw3-dev, etc.) for numerical computations, linear algebra, and scientific computing.

    - libvtk7-dev: Libraries for 3D computer graphics, visualization, and image processing.

    - libgomp1, libomp-dev, libpthread-stubs0-dev: Libraries for multi-threading support.

- Install Compilers

  - Purpose: Ensures that g++ and gcc are installed. These are the compilers for C++ and C, needed for compiling native code.

- Install Python and pip

  - Purpose: Installs Python 3 and pip (the Python package installer), which are needed to run Python-based tools and manage Python dependencies.

- Copy current directory to docker image

  - Purpose: Sets the working directory inside the Docker image to `/dgtd` and copies all files from the current directory (presumably where the Dockerfile resides) into the `/dgtd` directory inside the Docker image.

- Install Python dependencies

  - Purpose: Installs the Python dependencies listed in the `requirements.txt` file located in the `/dgtd` directory. The `--no-cache-dir` flag disables pip's download cache, so no cached package files are stored in the image, which keeps the image smaller.

- Set Path for libraries and CUDA

  - Purpose: Sets environment variables related to CUDA (a parallel computing platform and programming model). These variables define the paths to the CUDA libraries, binaries, headers, and compiler (`nvcc`).

# -----------------------------------------------------------------------------------------

# Step 3 : Installing required packages and setting up environment variables

# Update system and install libraries

RUN apt-get update && apt-get install -y \

build-essential \

cmake \

gfortran \

libopenblas-dev \

liblapack-dev \

libfftw3-dev \

libmetis-dev \

libvtk7-dev \

libgomp1 \

libomp-dev \

libblas-dev \

libpthread-stubs0-dev \

&& rm -rf /var/lib/apt/lists/*

# Install Compilers

RUN apt-get update && apt-get install -y g++ gcc

# Install Python and pip

RUN apt-get update && apt-get install -y python3 python3-pip

# Copy current directory to docker image

WORKDIR /dgtd

COPY . .

# Install Python dependencies

RUN pip install --no-cache-dir -v -r /dgtd/requirements.txt

# Set Path for libraries and CUDA

ENV CUDA_HOME /usr/local/cuda

ENV LD_LIBRARY_PATH ${CUDA_HOME}/lib64

ENV PATH ${CUDA_HOME}/bin:${PATH}

ENV CPATH ${CUDA_HOME}/include:${CPATH}

ENV CUDACXX ${CUDA_HOME}/bin/nvcc

Finally, we compile the program using cmake and make, and then set up the Docker container to start a Bash shell when it runs. CMD ["bash"] sets the default command to run inside the container. When the container is started without specifying a command, it automatically launches a Bash shell.

# -----------------------------------------------------------------------------------------

# Step 4 : Compiling Program

RUN ls -l && mkdir build && cd build && cmake .. && make -j 4

CMD ["bash"]- Use the

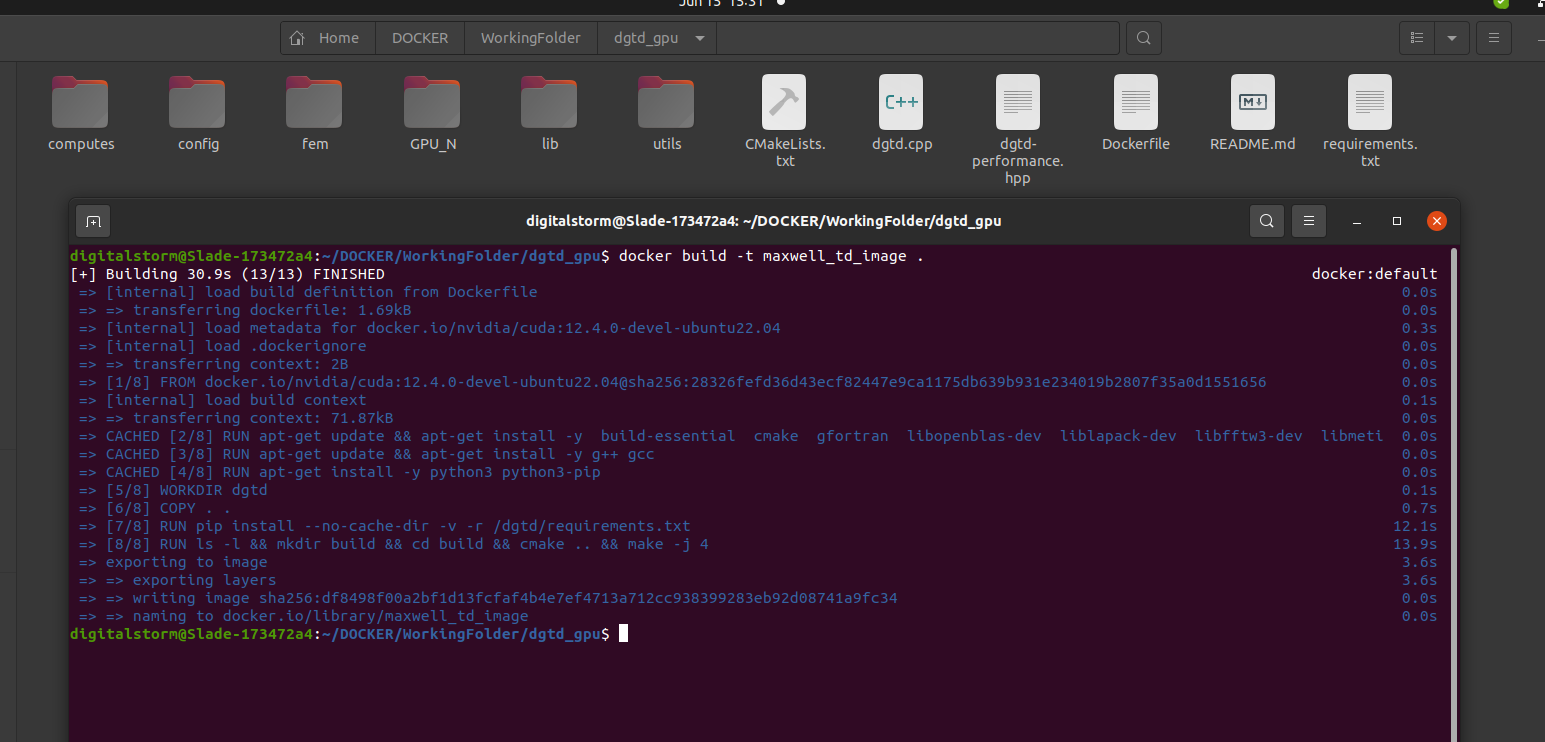

docker buildcommand to build the Docker image from your Dockerfile.

Once you have created your Dockerfile and saved it in your project directory, you can build a Docker image using the docker build command. Here's how you would do it:

docker build -t maxwell_td_image .

- `docker build`: This command tells Docker to build an image from a Dockerfile.

- `-t maxwell_td_image`: The -t flag tags the image with a name (maxwell_td_image in this case). This name can be whatever you choose and is used to refer to this specific image later on.

- `.`: This specifies the build context. The dot indicates that the Dockerfile and any other files needed for building the image are located in the current directory.
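The build and a first test run can be wrapped in two small functions, sketched below. The image name comes from this guide; the function names, the optional tag argument, and the use of `--gpus all` are assumptions. In particular, `--gpus all` only works when the NVIDIA Container Toolkit is installed on the host.

```shell
IMAGE_NAME="maxwell_td_image"   # name used in this guide

build_image() {
    tag="${1:-latest}"          # Docker itself defaults to "latest" when no tag is given
    context="${2:-.}"           # build context directory
    # -f names the Dockerfile explicitly; it defaults to <context>/Dockerfile anyway.
    docker build -t "$IMAGE_NAME:$tag" -f "$context/Dockerfile" "$context"
}

run_image() {
    tag="${1:-latest}"
    # --rm removes the container on exit; -it attaches an interactive terminal,
    # which suits the image's default CMD ["bash"].
    docker run --rm -it --gpus all "$IMAGE_NAME:$tag"
}
```

For example, `build_image v1 .` followed by `run_image v1` builds the image from the current directory and opens a shell in it. Drop `--gpus all` if the host has no GPU support configured.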

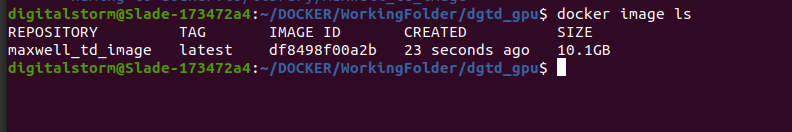

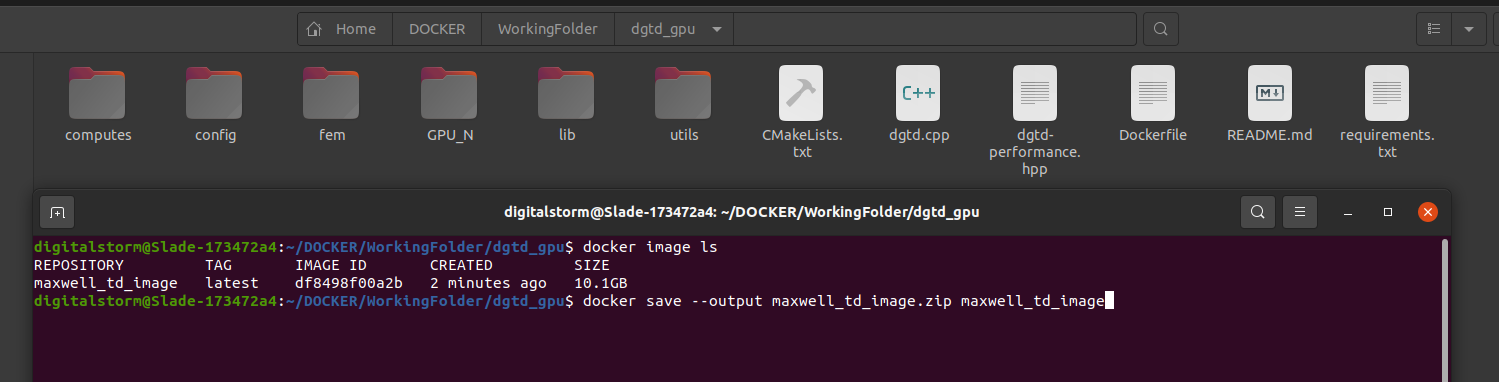

How to check images

To verify if a Docker image has been successfully built on your local system, you can use the docker images command. Here’s how you can do it:

- Open your terminal (Command Prompt on Windows or Terminal on macOS/Linux).

- Run the following command

docker images

or, equivalently:

docker image ls

Either command lists all Docker images that are currently present on your local system. Each image is shown with its repository, tag, image ID, creation date, and size.

- Finding your image

Look through the list for the image you just built. If it was successfully built, it should appear in the list. Check the repository and tag names to identify your specific image. The repository name is the name you passed to the -t flag of docker build (maxwell_td_image in this guide), and the tag is latest unless you specified another one.

- Confirming successful build

If your image appears in the list with the correct details (repository name, tag, etc.), it indicates that Docker successfully built and stored the image on your local machine.
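In a script, scanning the table by eye is not practical. One possible approach, sketched here with hypothetical function names, relies on `docker image inspect` returning a non-zero exit status when the image is not present locally.

```shell
image_exists() {
    # Exit status is 0 if the image is found locally, non-zero otherwise.
    docker image inspect "$1" >/dev/null 2>&1
}

report_image() {
    if image_exists "$1"; then
        echo "found: $1"
        # Show only the columns of interest for this repository.
        docker image ls --format '{{.Repository}}:{{.Tag}}  {{.ID}}  {{.Size}}' "$1"
    else
        echo "missing: $1"
        return 1
    fi
}
```

`report_image maxwell_td_image` prints a short summary when the image exists and returns a failing status otherwise, so it can gate later steps of a build script.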

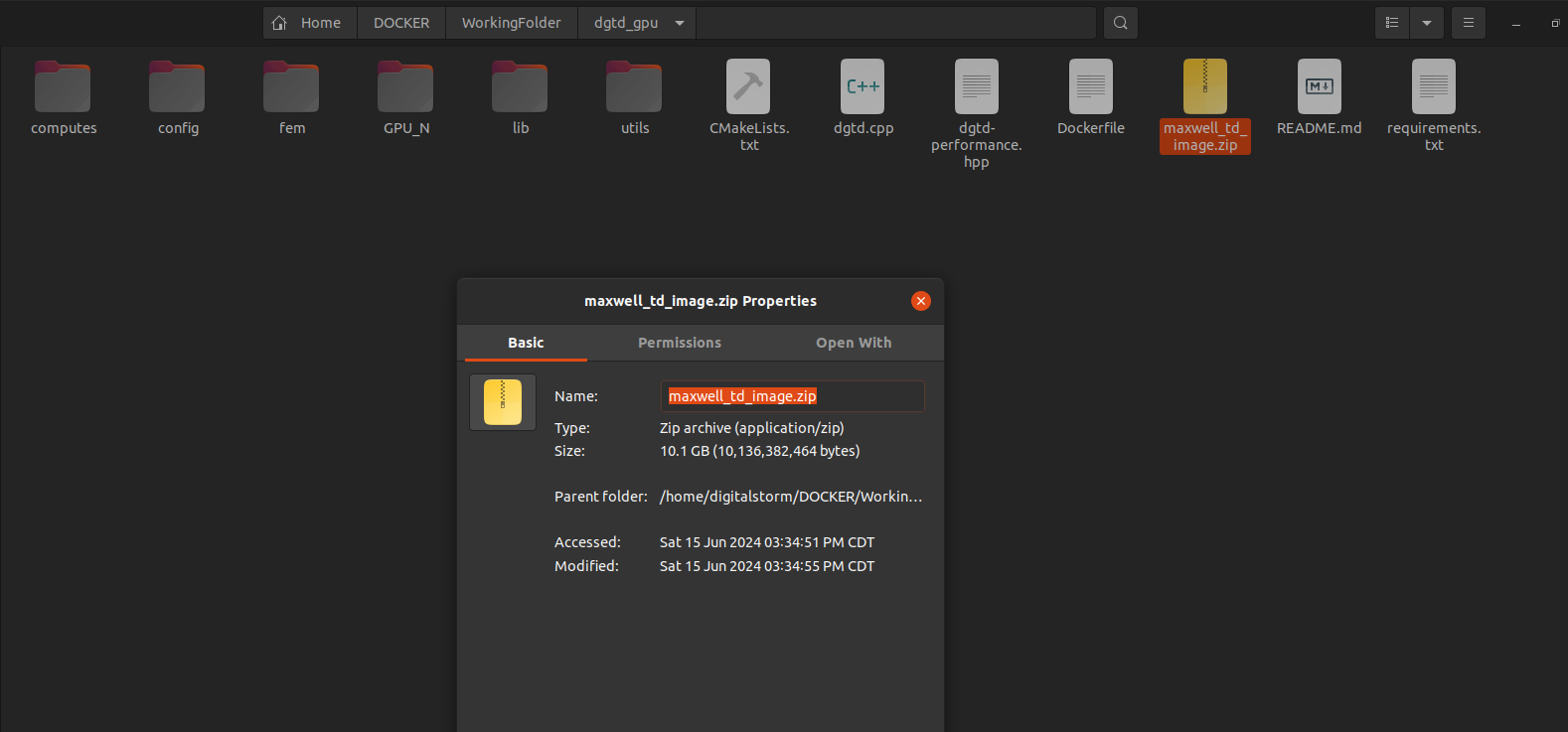

How to save built image locally

To save a Docker image locally as a tar archive, you'll use the docker save command. This command packages the Docker image into a tarball archive that can be transferred to other machines or stored for backup purposes. Here’s how you can do it:

- Open your terminal (Command Prompt on Windows or Terminal on macOS/Linux).

- Run the following command

docker save -o <output-file-name>.tar <image-name>

<output-file-name>.tar: Replace this with the desired name for your tar archive file.

<image-name>: This specifies the Docker image you want to save.

- Confirmation:

After running the command, Docker packages the specified image into a tar archive named <output-file-name>.tar. You should see the file (<output-file-name>.tar) in your current directory unless you specified a different path for the output.
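The saved archive is usually only half of the workflow, since the receiving machine has to import it again with `docker load`. The sketch below pairs the two commands; the function names and the default archive name (image name plus `.tar`) are assumptions made for this example.

```shell
save_image() {
    image="$1"
    archive="${2:-$1.tar}"      # default file name is an assumption
    # Write the image, with all its layers and tags, to a tar archive.
    docker save -o "$archive" "$image"
}

load_image() {
    # Run this on the receiving machine after copying the archive over.
    docker load -i "$1"
}
```

For example, `save_image maxwell_td_image` writes maxwell_td_image.tar, and `load_image maxwell_td_image.tar` on another host restores the image under the same name. The default archive name is only sensible for image names without a slash or colon.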

Managing Docker Resources

Cleaning Up Unused Resources

To clean up unused resources (stopped containers, unused networks, dangling images, and unused build cache), use the following command:

docker system prune

Volumes are not removed unless you add the --volumes flag. To also remove all unused images (not just dangling ones), add the -a flag:

docker system prune -a

Removing Docker Images

To remove specific images, list them using docker images -a and then delete them with docker rmi:

docker images -a # List all images

docker rmi Image Image # Remove specific images by ID or tag

To remove dangling images, use:

docker image prune

Removing Docker Containers

To remove specific containers, list them using docker ps -a and then delete them with docker rm:

docker ps -a # List all containers

docker rm ID_or_Name ID_or_Name # Remove specific containers by ID or name

To remove all exited containers, use:

docker rm $(docker ps -a -f status=exited -q)

Removing Docker Volumes

To remove specific volumes, list them using docker volume ls and then delete them with `docker volume rm`:

docker volume ls # List all volumes

docker volume rm volume_name volume_name # Remove specific volumes by name

To remove dangling volumes, use:

docker volume prune
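Because the cleanup commands in this section delete data, it can be worth looking at what they would touch first. The two functions below are a sketch with made-up names: the first only lists candidates, and the second removes exited containers while handling the case where there are none, in which a bare `docker rm` with no arguments would fail.

```shell
cleanup_preview() {
    docker system df                     # disk usage of images, containers, volumes, build cache
    docker images -f dangling=true       # untagged images that "docker image prune" targets
    docker ps -a -f status=exited        # stopped containers
    docker volume ls -f dangling=true    # volumes not referenced by any container
}

remove_exited() {
    ids="$(docker ps -a -f status=exited -q)"
    if [ -z "$ids" ]; then
        echo "no exited containers"
        return 0
    fi
    # Word splitting of $ids is intentional: one container ID per argument.
    docker rm $ids
}
```

Run `cleanup_preview` before any of the prune commands, and use `remove_exited` in scripts where an empty list should not count as an error.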